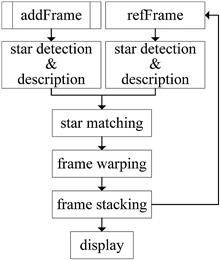

Image registration is a long-studied topic that has found a new application in deep-sky imaging: live stacking. In this Letter, we propose a live stacking algorithm based on star detection, description, and matching. A thresholding method based on Otsu's method and centralization is proposed for star detection. Then, a translation- and rotation-invariant descriptor is proposed to provide accurate feature matching. Extensive experiments show that the proposed method is feasible for live stacking of deep-sky images.
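The detection step can be illustrated with a minimal sketch. It assumes an 8-bit grayscale image stored as a list of rows, and it reads "centralization" as an intensity-weighted centroid, which is an interpretation rather than something the abstract states. The function names `otsu_threshold` and `weighted_centroid` are hypothetical, and the sketch treats the whole image as containing a single star.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level that maximizes the between-class variance."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0
    for t in range(levels):
        w_bg += hist[t]            # pixels with value <= t (background)
        sum_bg += t * hist[t]
        if w_bg == 0:
            continue
        w_fg = total - w_bg        # pixels with value > t (foreground)
        if w_fg == 0:
            break
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def weighted_centroid(image, threshold):
    """Intensity-weighted centroid (row, col) of pixels above the threshold."""
    mass = row_sum = col_sum = 0.0
    for r, row in enumerate(image):
        for c, v in enumerate(row):
            if v > threshold:
                mass += v
                row_sum += r * v
                col_sum += c * v
    if mass == 0:
        return None                # no pixel exceeds the threshold
    return row_sum / mass, col_sum / mass


# Synthetic 5x5 frame: dark sky (10) with one symmetric star in the middle.
image = [
    [10, 10, 10, 10, 10],
    [10, 10, 100, 10, 10],
    [10, 100, 200, 100, 10],
    [10, 10, 100, 10, 10],
    [10, 10, 10, 10, 10],
]
threshold = otsu_threshold([v for row in image for v in row])
centroid = weighted_centroid(image, threshold)
```

A real frame contains many stars, so a practical pipeline would first label connected regions above the threshold and compute one centroid per region.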

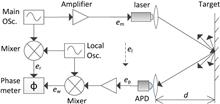

We present a phase-difference ranging system with modulation frequencies of about 1.5 GHz. To reduce phase-difference drift, the high-frequency signals are synthesized by digital fractional phase-locked loops, and a differential measurement method is implemented by introducing an optical switch. To eliminate electrical crosstalk, a photoelectric modulator is used as a mixer. The experimental results show that the distance error of the proposed system is within ±0.04 mm and the phase-difference error is less than 0.15°.
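The two reported error figures can be related through the standard phase-shift ranging relation d = (c / 2f)(Δφ / 360°). The sketch below assumes a round-trip measurement in vacuum (the refractive index of air is ignored), and `distance_from_phase` is a hypothetical helper name.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def distance_from_phase(phase_deg, mod_freq_hz):
    """Convert a round-trip phase shift in degrees to a one-way distance in meters."""
    half_wavelength = C / (2.0 * mod_freq_hz)   # unambiguous range
    return half_wavelength * (phase_deg / 360.0)


# Distance equivalent of a 0.15 degree phase error at 1.5 GHz, in millimeters.
error_mm = distance_from_phase(0.15, 1.5e9) * 1e3
```

Under these assumptions a 0.15° phase error corresponds to roughly 0.04 mm, which agrees with the distance error quoted in the abstract.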

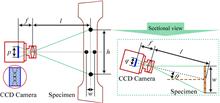

Existing two-dimensional vision measurement methods ignore lens distortion, require the measured plane to be perpendicular to the optical axis, and involve complex operations. To address these issues, a new approach based on local sub-plane mapping is presented. Plane calibration is performed by dividing the calibration plane into sub-planes, with an approximate affine invariance holding between each small sub-plane and the corresponding region of the image plane. Thus, the coordinate transformation can be performed precisely without lens distortion correction. Comparative real-world experiments show that the proposed approach is robust and yields higher accuracy than traditional methods.
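The local mapping idea can be sketched for a single sub-plane. The example assumes that three calibration points of a sub-plane are known in both pixel and plane coordinates, and it solves the exact affine map through them with Cramer's rule. The names `fit_affine` and `apply_affine` are hypothetical, and the paper's actual sub-plane layout and fitting procedure are not given in the abstract.

```python
def fit_affine(src, dst):
    """Solve the 2x3 affine map that takes three src points to three dst points."""
    (x1, y1), (x2, y2), (x3, y3) = src
    det = x1 * (y2 - y3) - y1 * (x2 - x3) + (x2 * y3 - x3 * y2)
    if abs(det) < 1e-12:
        raise ValueError("source points are collinear")
    rows = []
    for k in range(2):  # k = 0 solves the first output coordinate, k = 1 the second
        v1, v2, v3 = dst[0][k], dst[1][k], dst[2][k]
        a = (v1 * (y2 - y3) - y1 * (v2 - v3) + (v2 * y3 - v3 * y2)) / det
        b = (x1 * (v2 - v3) - v1 * (x2 - x3) + (x2 * v3 - x3 * v2)) / det
        c = (x1 * (y2 * v3 - y3 * v2) - y1 * (x2 * v3 - x3 * v2)
             + v1 * (x2 * y3 - x3 * y2)) / det
        rows.append((a, b, c))
    return rows


def apply_affine(rows, point):
    """Map a pixel coordinate into plane coordinates with the fitted affine map."""
    x, y = point
    return tuple(a * x + b * y + c for a, b, c in rows)


# Pixel corners of one sub-plane and their known plane coordinates (made-up values).
pixels = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
plane_mm = [(5.0, 5.0), (7.0, 5.0), (5.0, 8.0)]
local_map = fit_affine(pixels, plane_mm)
measured = apply_affine(local_map, (5.0, 5.0))
```

Because each map is valid only inside its own small sub-plane, lens distortion is absorbed locally instead of being modeled globally. With more than three points per sub-plane, a least-squares fit would replace the exact solve.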

We find that applying the Retinex color constancy algorithm in machine vision can reduce the interference of illumination with printing chromatic aberration. We propose a new method that fuses weighted least squares (WLS) and multi-scale Retinex (MSR) in the L*a*b* color space to further improve the recovery of print colors. The effectiveness of the proposed method is tested in experiments on images of the same original print acquired under different illuminants. The method shows good application prospects in machine vision by virtue of its strong color constancy, that is, its ability to maintain the colors of the original print.
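For reference, the standard multi-scale Retinex output for a single channel is usually written as below. This is the textbook formulation, not the fused method of this paper, whose combination with weighted least squares is not specified in the abstract. Here $I$ is the input channel, $G_{\sigma_k}$ is a Gaussian surround of scale $\sigma_k$, $*$ denotes convolution, and the weights $w_k$ are assumed to sum to one.

```latex
R_{\mathrm{MSR}}(x, y) = \sum_{k=1}^{K} w_k
  \left[ \log I(x, y) - \log\!\big( (G_{\sigma_k} * I)(x, y) \big) \right],
\qquad \sum_{k=1}^{K} w_k = 1
```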

Commercial iris biometric systems exhibit good performance for near-infrared (NIR) images but poor performance for visible wavelength (VW) data. To address this problem, we propose an iris biometric system for VW data. The system localizes iris boundaries using bimodal thresholding, the Euclidean distance transform (EDT), and a circular pixel counting scheme (CPCS). Eyelids are localized using a parabolic pixel counting scheme (PPCS), and eyelashes, light reflections, and skin parts are adaptively detected using image intensity. Features are extracted using the log-Gabor filter, and matching is then performed using the Hamming distance (HD). The experimental results on UBIRIS and CASIA show that the proposed technique outperforms contemporary approaches.
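The matching step can be sketched as a normalized Hamming distance between two binary iris codes. The occlusion masks are an assumption borrowed from common iris-matching practice (bits covered by eyelids, eyelashes, or reflections are ignored); the abstract does not say whether the authors use masks. The name `hamming_distance` is hypothetical.

```python
def hamming_distance(code_a, code_b, mask_a=None, mask_b=None):
    """Fraction of disagreeing bits among the bits valid in both codes."""
    n = len(code_a)
    if len(code_b) != n:
        raise ValueError("iris codes must have the same length")
    if mask_a is None:
        mask_a = [1] * n
    if mask_b is None:
        mask_b = [1] * n
    valid = disagree = 0
    for a, b, ma, mb in zip(code_a, code_b, mask_a, mask_b):
        if ma and mb:                 # compare only bits valid in both codes
            valid += 1
            disagree += int(a != b)
    if valid == 0:
        raise ValueError("no valid bits to compare")
    return disagree / valid


code_1 = [1, 0, 1, 1, 0, 0, 1, 0]
code_2 = [1, 1, 1, 0, 0, 0, 1, 1]
hd_all = hamming_distance(code_1, code_2)
# Mark the last bit of the second code as occluded.
hd_masked = hamming_distance(code_1, code_2, mask_b=[1, 1, 1, 1, 1, 1, 1, 0])
```

A smaller distance indicates a more likely match; the decision threshold depends on the dataset and is not given in the abstract.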

A high-precision automatic state monitoring and abnormality alarm technique is proposed to address process improvement issues in fiber-optic coil winding and splicing. Industrial cameras are used to capture optical and hot images during the assembly of optical components of a fiber-optic gyroscope. A line and contour analysis technique is used to detect abnormal winding. By analyzing the intensity distribution of transmitted light, the graph cut model and multivariate Gaussian mixture model are used to detect and segment the splicing defects. Practical applications confirm the correctness and accuracy of our vision-based technique.

A two-step method for pose estimation based on five co-planar reference points is studied. In the first step, the pose of the object is estimated by a simple analytical solving process. The pixel coordinates of the reference points on the image plane are extracted through image processing. Then, using affine invariants of the reference points, which are separated by known distances, the coordinates of the reference points in the camera coordinate system are solved. In the second step, the results obtained in the first step are used as initial values of an iterative solving process to obtain the exact solution. In this process, an unconstrained nonlinear optimization objective function is built from the objective functions produced by the depth estimation and the co-planarity of the five reference points to ensure the accuracy and convergence rate of the nonlinear algorithm. The Levenberg-Marquardt optimization method is used to refine the initial values. The coordinates of the reference points in the camera coordinate system are obtained and transformed into the pose of the object. Experimental results show that the root mean square (RMS) error of the azimuth angle reaches 0.076° in the measurement range of 0°-90°; the RMS error of the pitch angle reaches 0.035° in the measurement range of 0°-60°; and the RMS error of the roll angle reaches 0.036° in the measurement range of 0°-60°.
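One possible form of the co-planarity term in such an objective function is sketched below. It assumes the residual for each extra point is the scalar triple product with the plane spanned by the first three points; the abstract does not give the authors' actual formulation, and `coplanarity_residuals` is a hypothetical name.

```python
def sub(p, q):
    """Component-wise difference p - q of two 3D points."""
    return tuple(a - b for a, b in zip(p, q))


def cross(u, v):
    """Cross product of two 3D vectors."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def dot(u, v):
    """Dot product of two 3D vectors."""
    return sum(a * b for a, b in zip(u, v))


def coplanarity_residuals(points):
    """Scalar triple products of points 4..n against the plane of points 1..3."""
    p0, p1, p2 = points[:3]
    normal = cross(sub(p1, p0), sub(p2, p0))   # unnormalized plane normal
    return [dot(normal, sub(p, p0)) for p in points[3:]]


# Five points on the plane z = 2 in camera coordinates (made-up values).
flat = [(0, 0, 2), (1, 0, 2), (0, 1, 2), (1, 1, 2), (2, 3, 2)]
# The same points with the last one lifted 0.5 units off the plane.
bent = flat[:4] + [(2, 3, 2.5)]
res_flat = coplanarity_residuals(flat)
res_bent = coplanarity_residuals(bent)
```

In an iterative refinement, the squares of these residuals would be added to the depth-estimation terms and minimized together, for example with a Levenberg-Marquardt solver.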

Iris recognition technology identifies a person based on his or her iris pattern. However, the accuracy of iris recognition depends on accurate iris localization. Localizing the pupil region in the presence of other low-intensity regions, such as hair, eyebrows, and eyelashes, is a challenging task. This study proposes an iris localization technique that localizes the pupillary boundary in a sub-image by using an integral projection function and two-dimensional shape properties (e.g., area, geometry, and circularity). The limbic boundary is localized using gradients and an error distance transform, and the boundary is regularized with active contours. Experimental results obtained from public databases show the superiority of the proposed technique over contemporary methods.
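The two ingredients named for pupil localization can be sketched as follows. The example assumes the pupil is the darkest compact region of a grayscale sub-image, so that the minima of the row and column intensity sums give a coarse position. The circularity measure 4πA/P² is the usual definition and equals 1 for a perfect circle. The function names are hypothetical, and the authors' exact use of these quantities is not described in the abstract.

```python
import math


def integral_projections(image):
    """Return (row sums, column sums) of a grayscale image given as a list of rows."""
    row_proj = [sum(row) for row in image]
    col_proj = [sum(col) for col in zip(*image)]
    return row_proj, col_proj


def darkest_location(image):
    """Coarse (row, col) estimate where both projections reach their minimum."""
    row_proj, col_proj = integral_projections(image)
    return row_proj.index(min(row_proj)), col_proj.index(min(col_proj))


def circularity(area, perimeter):
    """Shape compactness 4*pi*A / P^2; equals 1.0 for a perfect circle."""
    return 4.0 * math.pi * area / (perimeter ** 2)


# Bright 5x5 sub-image with a small dark blob around row 3, column 1.
image = [[200] * 5 for _ in range(5)]
image[3][1] = 20
image[3][2] = 60
image[2][1] = 60
pupil_guess = darkest_location(image)
circle_score = circularity(math.pi * 3.0 ** 2, 2.0 * math.pi * 3.0)
```

Candidate regions with low circularity, such as eyelashes or eyebrows, could then be rejected before the boundary is refined.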

A pose estimation method based on geometric constraints is studied. The coordinates of five feature points in the camera coordinate system are calculated to obtain the pose of an object on the basis of the geometric constraints formed by the lines connecting the feature points and the coordinates of the feature points on the CCD image plane. During the solution process, the scaled orthographic projection model is used to approximate the perspective projection model. The initial values of the coordinates of the five feature points in the camera coordinate system are obtained to ensure the accuracy and convergence rate of the nonlinear algorithm. In accordance with the perspective projection characteristics of the circular feature landmarks, we propose an approach that enables the iterative acquisition of accurate target poses through the correction of the perspective projection coordinates of the circular feature landmark centers. Experimental results show that the translation positioning accuracy reaches ±0.05 mm in the measurement range of 0–40 mm, and the rotation positioning accuracy reaches ±0.06° in the measurement range of 4°–60°.
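The approximation mentioned above can be illustrated by comparing the two projection models for a pinhole camera. The sketch assumes camera-frame coordinates with Z along the optical axis, a focal length `f`, and a single reference depth `z_ref` shared by all points of the object. These symbols and the function names are illustrative and do not come from the paper.

```python
def perspective(point, f):
    """Pinhole projection: each point is divided by its own depth Z."""
    x, y, z = point
    return (f * x / z, f * y / z)


def scaled_orthographic(point, f, z_ref):
    """Scaled orthographic projection: every point shares one scale f / z_ref."""
    x, y, _ = point
    scale = f / z_ref
    return (scale * x, scale * y)


f = 50.0         # focal length, same units as the image coordinates
z_ref = 1000.0   # reference depth of the object
near = (10.0, 5.0, 1000.0)   # point exactly at the reference depth
far = (10.0, 5.0, 1010.0)    # point 10 units behind the reference depth

same = perspective(near, f) == scaled_orthographic(near, f, z_ref)
gap = scaled_orthographic(far, f, z_ref)[0] - perspective(far, f)[0]
```

The two models agree at the reference depth and differ only slightly when the depth spread of the object is small compared with its distance, which is why the approximation is suitable for producing initial values.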